First CCI Mini-Workshop on Theoretical Foundations of Cyber-Physical Systems

Friday, April 14, 2017

EEB 132

9am – 12pm

This was a first-of-its-kind event consisting of research talks by several Viterbi CCI faculty working on theoretical aspects of Cyber-Physical Systems (CPS) from a range of disciplinary perspectives, including formal methods for software, control theory, optimization, signal processing, information theory, machine learning, and contract-based design.

The following is a summary of the talks presented at this mini-workshop.

Mihailo Jovanovic (Electrical Engineering) talked about the design of controllers for distributed plants with interaction links. He gave the example of the challenges associated with integrating different power grids. One challenge is identifying the signal exchange network, which involves a trade-off between performance and sparsity. He pointed to augmented Lagrangian algorithms, which introduce auxiliary variables that decouple the different terms of the objective. He concluded by discussing his work on physics-aware matrix completion.
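The decoupling idea behind augmented Lagrangian methods can be sketched with ADMM (the alternating direction method of multipliers) on a toy problem. This is a minimal illustration under stated assumptions, not the controller-design algorithm from the talk: a weighted quadratic stands in for the performance index, an l1 penalty promotes sparsity, and the names `admm_sparse` and `soft_threshold` are hypothetical. Introducing an auxiliary copy `z` of the variable `x` lets each term be minimized separately, one in closed form and one by soft-thresholding.

```python
def soft_threshold(v, kappa):
    """Proximal operator of kappa * |.|: shrink v toward zero by kappa."""
    if v > kappa:
        return v - kappa
    if v < -kappa:
        return v + kappa
    return 0.0


def admm_sparse(a, w, gamma, rho=1.0, iters=500):
    """ADMM for  min  sum_i w_i/2 (x_i - a_i)^2 + gamma * sum_i |z_i|  subject to  x = z.

    The quadratic "performance" term acts on x and the l1 "sparsity" term acts on the
    auxiliary copy z; the scaled dual variable u drives the two copies to agree.
    """
    n = len(a)
    x, z, u = [0.0] * n, [0.0] * n, [0.0] * n
    for _ in range(iters):
        # x-step: performance term + (rho/2)||x - z + u||^2, solved in closed form.
        x = [(w[i] * a[i] + rho * (z[i] - u[i])) / (w[i] + rho) for i in range(n)]
        # z-step: sparsity term + (rho/2)||x - z + u||^2, solved by soft-thresholding.
        z = [soft_threshold(x[i] + u[i], gamma / rho) for i in range(n)]
        # Dual step: accumulate the disagreement between x and z.
        u = [u[i] + x[i] - z[i] for i in range(n)]
    return z
```

For instance, `admm_sparse([3.0, 0.5, -2.0], [1.0, 1.0, 1.0], gamma=1.0)` converges to approximately `[2.0, 0.0, -1.0]`: the small entry is driven exactly to zero, which is the sparsity the penalty is meant to produce.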

Insoon Yang (Electrical Engineering) talked about risk-aware control of IoT systems when the probability distribution of the uncertainties is itself uncertain. IoT-enabled systems introduce new sources of risk into traditional control systems: imperfect data and statistical models, humans in the loop, external disturbances, and randomness in communication. A central question is “How can we design a controller that is robust against errors in the probability distribution of uncertainties?” The optimization problem of interest becomes a Lagrangian combination of expected cost and risk, and is solved using a bilevel optimization formulation with duality-based dynamic programming. He discussed applications to energy control, smart homes, smart grids, and safety-critical systems, and described a new course on Data-Driven Optimization and Control that he is introducing this fall.
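The robustness question can be made concrete with a one-step toy problem. The sketch below is a simplification under stated assumptions, not the bilevel, duality-based dynamic programming method from the talk: it swaps in a plain minimax over a small, finite set of candidate distributions (an ambiguity set), and the disturbance values, probabilities, cost, and control grid are all made up for illustration. It compares the control chosen for the nominal distribution with the control that minimizes the worst-case expected cost.

```python
# Hypothetical one-step problem: the disturbance w takes one of three values and the
# controller picks u to minimize the expected quadratic cost E[(u + w)^2].
W_VALUES = (-1.0, 0.0, 1.0)
NOMINAL = (1 / 3, 1 / 3, 1 / 3)               # distribution estimated from data
AMBIGUITY_SET = [NOMINAL, (0.1, 0.2, 0.7)]    # distributions that cannot be ruled out
U_GRID = [k / 100 for k in range(-100, 101)]  # candidate controls in [-1, 1]


def expected_cost(u, p):
    return sum(pi * (u + w) ** 2 for pi, w in zip(p, W_VALUES))


def worst_case_cost(u):
    """Expected cost under the least favorable distribution in the ambiguity set."""
    return max(expected_cost(u, p) for p in AMBIGUITY_SET)


u_nominal = min(U_GRID, key=lambda u: expected_cost(u, NOMINAL))
u_robust = min(U_GRID, key=worst_case_cost)
print(u_nominal, u_robust, worst_case_cost(u_nominal), worst_case_cost(u_robust))
```

Here the nominal design picks `u = 0`, while the robust design shifts to about `u = -0.11` to hedge against the skewed distribution, lowering the worst-case expected cost from 0.8 to about 0.68.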

Yan Liu (Computer Science) talked about her work on Machine Learning for Big Time Series Data. Her group is developing scalable and effective solutions for analyzing time series data by leveraging recent progress across disciplines, including Granger graphical models, point process models, deep neural networks and reinforcement learning, low-rank tensor analysis, and functional data analysis. She described her work on health care data, where major challenges include handling missing data and making inferences understandable to doctors. For explainable AI, they are working on a mimic learning framework in which a trained deep learning model is used to train a simpler “student” model (for example, a decision tree or a gradient boosting tree) that is easier to interpret.
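The teacher-student idea behind mimic learning can be sketched in a few lines. In this minimal, hypothetical example a fixed logistic function stands in for the trained deep model, and the student is a single decision stump (a depth-1 regression tree) rather than a gradient boosting tree; the data grid, weights, and function names are assumptions. The key step is that the student is fit to the teacher's soft predictions rather than to hard labels.

```python
import math
from itertools import product


def teacher(x):
    """Hypothetical stand-in for a trained deep model: returns P(y = 1 | x) for x = (x0, x1)."""
    logit = 8.0 * (x[0] - 0.45) + 0.5 * (x[1] - 0.5)
    return 1.0 / (1.0 + math.exp(-logit))


def fit_stump(xs, targets):
    """Fit a depth-1 regression tree to soft targets by minimizing squared error."""
    best = None
    for f in range(len(xs[0])):
        values = sorted({x[f] for x in xs})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [y for x, y in zip(xs, targets) if x[f] <= t]
            right = [y for x, y in zip(xs, targets) if x[f] > t]
            ml, mr = sum(left) / len(left), sum(right) / len(right)
            sse = sum((y - ml) ** 2 for y in left) + sum((y - mr) ** 2 for y in right)
            if best is None or sse < best[0]:
                best = (sse, f, t, ml, mr)
    return best[1:]  # (feature, threshold, left value, right value)


# Training inputs: an 11 x 11 grid over [0, 1]^2, labelled with the teacher's soft predictions.
grid = [i / 10 for i in range(11)]
xs = list(product(grid, grid))
soft_labels = [teacher(x) for x in xs]
f, t, ml, mr = fit_stump(xs, soft_labels)


def student(x):
    """Interpretable mimic model: a single if-then rule."""
    return ml if x[f] <= t else mr


fidelity = sum((student(x) >= 0.5) == (teacher(x) >= 0.5) for x in xs) / len(xs)
print(f"if x{f} <= {t:.2f}: {ml:.2f} else {mr:.2f}  (agreement with teacher: {fidelity:.0%})")
```

On this grid the stump splits on the first feature near 0.45, where the teacher's output crosses 0.5, so the two-leaf student reproduces the teacher's decisions while remaining readable as one rule.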

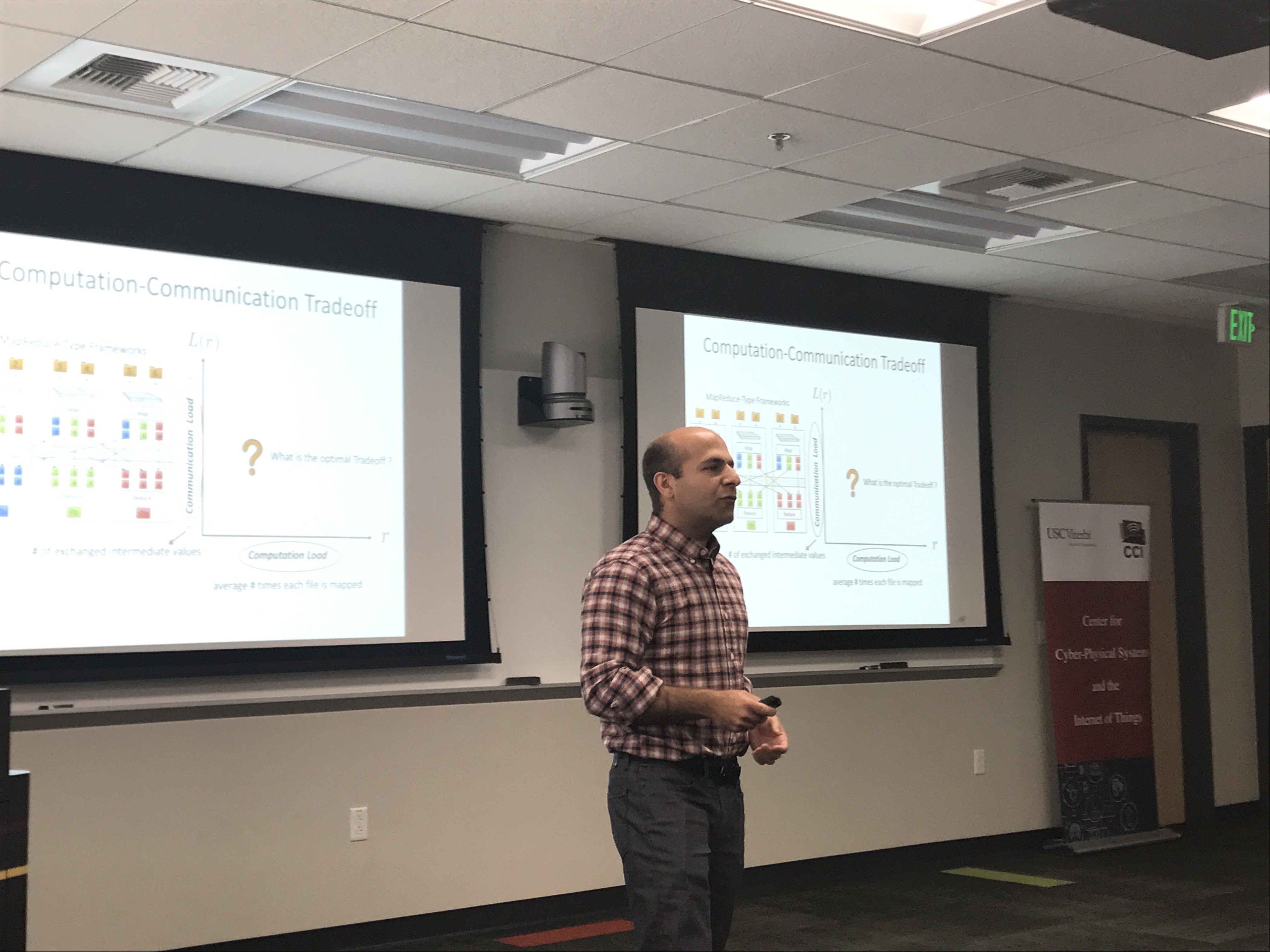

Salman Avestimehr (Electrical Engineering) talked about his recent and ongoing work on coded distributed computing, which is important for developing distributed and cloud computing infrastructures for CPS. A key question is how to optimally trade off network resources such as storage, communication, computation, and time/energy. He discussed their work on the computation-communication tradeoff for MapReduce-type decomposition: today’s systems give a linear tradeoff between communication and computation load, while the coded computing techniques he is developing perform dramatically better, with the communication load varying inversely with the computation load.
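The gap between the two tradeoffs can be tabulated directly. The sketch below assumes the formulas reported in the coded distributed computing work of Li, Maddah-Ali, Yu, and Avestimehr: with `K` nodes and computation load `r` (the number of nodes that map each file), the normalized shuffle load is `1 - r/K` without coding and `(1/r)(1 - r/K)` with coded multicasting. It only evaluates these expressions; it does not implement the coded shuffle itself, and the function names are hypothetical.

```python
from fractions import Fraction


def uncoded_load(r, K):
    """Normalized shuffle load of uncoded MapReduce-style computing: 1 - r/K."""
    return 1 - Fraction(r, K)


def coded_load(r, K):
    """Normalized shuffle load with coded multicasting: (1/r)(1 - r/K)."""
    return Fraction(1, r) * (1 - Fraction(r, K))


K = 10  # number of computing nodes (illustrative)
for r in range(1, K + 1):  # r = number of nodes that map each file
    print(f"r={r:2d}  uncoded={str(uncoded_load(r, K)):>5}  coded={str(coded_load(r, K)):>5}")
```

With `K = 10` and `r = 2`, for example, the uncoded load is 4/5 while the coded load is 2/5; in general the coded scheme cuts the load by a factor of `r`.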

Pierluigi Nuzzo (Electrical Engineering) talked about improving the design process for CPS. His interest is in the theoretical foundations of design. Today’s state of the art is the V diagram: top-down design from system architecture to subsystem design to component design, followed by bottom-up testing and validation from component testing to subsystem testing to verification and validation. His goal is to develop a rigorous systems engineering framework that improves design quality and productivity and reduces cost by enabling scalable design-space exploration across different domains. He described the contract-based design methodology he has been developing, which includes mechanisms for distributing verification. Contracts are “assume-guarantee” pairs: a component guarantees certain behavior provided that its environment satisfies the stated assumptions. He is developing CHASE, a framework for contract-based requirement engineering, and ArchEx, an architecture exploration toolbox.
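Assume-guarantee reasoning can be illustrated with contracts over a small, finite set of behaviors. This is a minimal sketch of one common set-based formalization (contracts in saturated form, where the guarantee is enlarged with every behavior that violates the assumption), not the CHASE or ArchEx tools; the behavior universe, the example contracts, and the names `Contract` and `behaviors_where` are hypothetical. Refinement is what lets verification be distributed: if a subsystem contract refines the system contract, any component that implements the subsystem contract also implements the system contract, so the two checks can be done separately.

```python
from itertools import product

# Hypothetical universe of behaviors: every pair (x, y) with x, y in 0..9,
# e.g. x = an input level from the environment, y = the component's output.
UNIVERSE = frozenset(product(range(10), repeat=2))


class Contract:
    """Assume-guarantee contract (A, G) over UNIVERSE, stored in saturated form."""

    def __init__(self, assume, guarantee):
        self.A = frozenset(assume)
        # Saturation: behaviors that violate the assumption are allowed by the guarantee.
        self.G = frozenset(guarantee) | (UNIVERSE - self.A)

    def implemented_by(self, behaviors):
        """A component implements the contract if all its behaviors lie in G."""
        return frozenset(behaviors) <= self.G

    def refines(self, other):
        """self refines other: it accepts at least as many environments (larger A)
        and guarantees at least as much (smaller G)."""
        return self.A >= other.A and self.G <= other.G


def behaviors_where(pred):
    return {b for b in UNIVERSE if pred(*b)}


# System-level contract: if x <= 5, the output must satisfy y <= x + 2.
spec = Contract(behaviors_where(lambda x, y: x <= 5), behaviors_where(lambda x, y: y <= x + 2))
# Subsystem contract: tolerates more inputs (x <= 7) and promises a tighter output (y <= x + 1).
sub = Contract(behaviors_where(lambda x, y: x <= 7), behaviors_where(lambda x, y: y <= x + 1))

component = {(x, min(x + 1, 9)) for x in range(10)}  # a made-up component
faulty = {(x, x + 3) for x in range(7)}              # violates the spec at small x

print(sub.refines(spec), spec.refines(sub))  # refinement holds in one direction only
print(sub.implemented_by(component), spec.implemented_by(component), spec.implemented_by(faulty))
```

Because `sub` refines `spec`, checking `component` against the smaller subsystem contract is enough to conclude that it also satisfies the system-level contract.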

Antonio Ortega (Electrical Engineering) talked about his research area, Graph Signal Processing, which generalizes many aspects of traditional signal processing (the notions of frequency, transforms, and filtering) to signals defined on graphs. He described applications including sensor networks, social networks, and image processing. His group is developing techniques for denoising, filtering, sampling, and reconstruction. He described their work on applying these tools to machine learning, such as optimizing which samples to label in a given data set. Other applications they have explored include learning the best attractive Gaussian Markov Random Field model from data. These techniques are relevant to CPS because such systems are large and irregular in space and time, require sampling and interpolation, involve variable topologies, and call for data reduction to manage complexity, as well as control and optimization.
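A basic graph filter shows how frequency and filtering carry over to graphs. In this hypothetical sketch, the eigenvalues of the combinatorial Laplacian `L = D - W` play the role of frequencies, and repeatedly applying `I - alpha L` acts as a low-pass filter with response `(1 - alpha*lambda)^steps`; choosing `alpha = 1 / (2 * max degree)` keeps that response between 0 and 1, since every Laplacian eigenvalue is at most twice the maximum degree. The five-node graph, the readings, and the function names are made up, and this generic polynomial filter is not a specific method from Ortega's group.

```python
# Hypothetical 5-node sensor graph (undirected): {node: {neighbor: edge weight}}.
ADJ = {
    0: {1: 1.0, 2: 1.0},
    1: {0: 1.0, 2: 1.0, 3: 1.0},
    2: {0: 1.0, 1: 1.0},
    3: {1: 1.0, 4: 1.0},
    4: {3: 1.0},
}


def laplacian_apply(adj, x):
    """Compute L x for L = D - W: (L x)_i = sum_j w_ij (x_i - x_j)."""
    return [sum(w * (x[i] - x[j]) for j, w in adj[i].items()) for i in range(len(x))]


def variation(adj, x):
    """Graph total variation x^T L x: small when x changes little across edges."""
    return sum(xi * lxi for xi, lxi in zip(x, laplacian_apply(adj, x)))


def lowpass(adj, x, steps=3):
    """Apply the polynomial graph filter (I - alpha L)^steps to the signal x."""
    alpha = 1.0 / (2 * max(sum(nbrs.values()) for nbrs in adj.values()))
    for _ in range(steps):
        lx = laplacian_apply(adj, x)
        x = [xi - alpha * li for xi, li in zip(x, lx)]
    return x


noisy = [1.0, 1.4, 0.8, 2.1, 1.7]  # made-up sensor readings
smooth = lowpass(ADJ, noisy)
print(variation(ADJ, noisy), variation(ADJ, smooth))
```

Filtering leaves a constant signal unchanged (it sits at graph frequency zero) and preserves the sum of the readings, while lowering the total variation `x^T L x`, so the readings become smoother across connected sensors.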

Ketan Savla (Civil and Environmental Engineering) talked about his perspectives on theoretical aspects of CPS. His goal is to develop computational tools (from operations research, network science, and decision theory) and integrate them with constraints from the physical world in the context of applications (physics, human behavior, and dynamics). He has been exploring optimization, queueing systems, network flows, and game theory while taking into account constraints and models of motion kinematics, psychology, dynamics, and physics. The resulting models are more sophisticated, and one can analyze their stability, robustness, and optimal control. He described his work on state-dependent queues arising from human-in-the-loop systems. His group worked with psychologists to develop relevant models with internal dynamics, and analyzing these systems leads to different policies: it might even be beneficial to idle the server in some cases. For example, it may be optimal to keep human operators busy only about 70% of the time. In these systems throughput depends on second moments, and surprisingly it is higher in stochastic environments (the more variation in jobs, the more productive the humans).

Chao Wang (Computer Science) talked about his work on formal methods in software engineering, with an interest in the verification and synthesis of software. Are there applications where code automatically generated from specifications can be useful? He has worked on hardware verification, embedded software, concurrent software, and CPS/IoT applications. He illustrated the approach with the design of traffic controllers. The system is complicated, but there are some critical properties that must never be violated, such as “crossing roads must not be simultaneously green”. The idea is to synthesize, from the specification, a reactive “shield”: a possibly sophisticated finite state machine that monitors the output of the run-time system and modifies it when necessary to ensure the specification is met. Formally, this can be described as a two-player safety game, which is solved to yield a winning strategy under which bad states are avoided no matter how errors are introduced. The approach can be applied to safety certification, because it suffices to certify the shield rather than the entire design.
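The shield construction can be sketched as a toy game over traffic-light states. Everything here is an assumption for illustration, not the synthesis procedure from the talk: each light may hold or advance along R→G→Y→R (yellow must advance), the only safety property is that both lights are never green together, the design's proposed output plays the adversary, and the shield's deviation measure (fewest lights changed) is a simple stand-in. The winning region is computed as a greatest fixpoint: the safe states from which the shield can always move to another winning state.

```python
from itertools import product

LIGHTS = ("R", "G", "Y")
STATES = list(product(LIGHTS, repeat=2))  # (north-south light, east-west light)


def legal_next(light):
    """Allowed next values of one light: hold or advance R->G->Y->R; yellow must advance."""
    return {"R": ("R", "G"), "G": ("G", "Y"), "Y": ("R",)}[light]


def successors(s):
    return [(a, b) for a in legal_next(s[0]) for b in legal_next(s[1])]


def safe(s):
    return s != ("G", "G")  # crossing roads must not be simultaneously green


def winning_region():
    """Greatest fixpoint: keep safe states that have at least one successor still in the set."""
    W = {s for s in STATES if safe(s)}
    while True:
        W_next = {s for s in W if any(t in W for t in successors(s))}
        if W_next == W:
            return W
        W = W_next


W = winning_region()


def shield(state, proposed):
    """Pass the design's proposed output through if it is legal and stays winning;
    otherwise substitute the legal winning output that changes the fewest lights."""
    options = [t for t in successors(state) if t in W]
    if proposed in options:
        return proposed
    return min(options, key=lambda t: sum(x != y for x, y in zip(t, proposed)))


print(shield(("G", "R"), ("G", "G")))  # an unsafe proposal gets corrected
```

Starting from any winning state, every output the shield emits is a legal, safe move that stays winning, whatever the design proposes. In this small example every safe state turns out to be winning, but the fixpoint is what identifies such states in general.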